2025-03-10 graphs lecture 13

[[lecture-data]]

1. Graph Signals and Graph Signal Processing

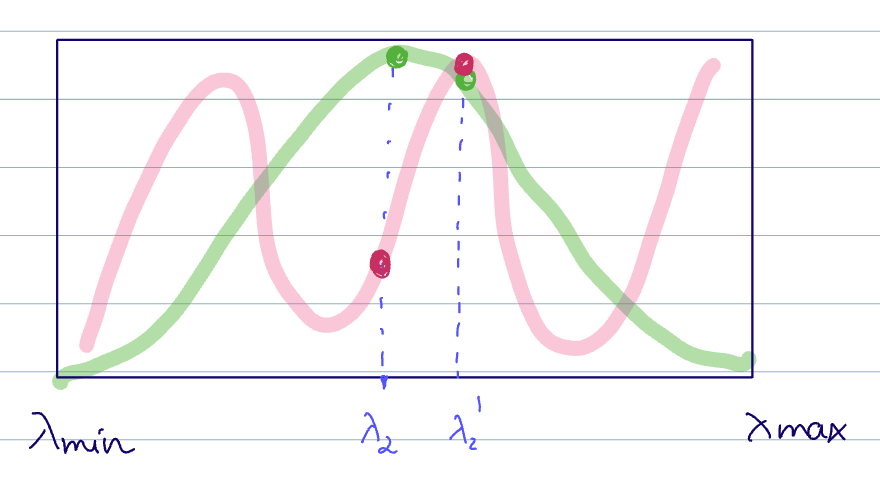

Recall the definition for an integral Lipschitz filter

Notice that the filter becomes flat at high frequencies

- At low

, they can be arbitrarily thin. - At medium

, these filters are approximately lipschitz filters. - At high

, they become flat and lose discriminability

Stability to different graph perturbations

Recall the types of perturbations on shift operators:

Unlike dilation, absolute perturbations (like the additive perturbations) do not take edge weights into account but can model changes in edge existence. This is fine for unweighted graphs, but for weighted graphs, this renders them unmeaningful. We want something that both

- takes edge weights into account

- can model edge additions and deletions

ie a perturbation that can combine these two benefits.

given

ie

where

- dilation takes into account edge weights, but cannot model edge additions and deletions

- additive perturbations can model edge additions and deletions, but do not take into account edge weights

- relative perturbations take into account both edge weights and additions/deletions

stability for dilations

Let

ie, integral Lipschitz filters are stable to dilations/scalings

Via binomial expansion, we have

Recall that

Right multiplying each side by

Where

- the second line

equality holds since the are eigenvectors of and holds from the definition of integral Lipschitz filter when letting .

Thus

ie, the integral Lipschitz filter is stable to dilation

This is universal for graphs of any size, ie any number of nodes.

This property of graph convolution is independent of the underlying graph.

This means that if we can control the Lipschitz constant

The filter is still non-discriminative at high frequencies. This is the tradeoff for having stability in graph convolution.

see integral lipschitz filters are stable to dilations

stability to additive perturbations

Let

Suppose

Where

ie, graph convolutions are stable to additive perturbations, provided they have Lipschitz spectral response.

Left as an exercise

Check Gama et al. 2019.

The statement becomes

This means we have lipschitz stability to additive perturbations when

- this is not bad for small

, but terrible for large graphs unless for . - this holds for all graphs of size

. ie, this is a property of the graph convolution and will be true regardless of the actual underlying graph - Similar to filters that are stable to dilation,

can be controlled by design or by penalizing large values.

see Lipschitz filters are stable to additive perturbations

stability to relative perturbations

Let

This tells us that the edge changes in the perturbed graph is tied to the degrees of the nodes.

see relative perturbation edge changes are tied to node degree

Suppose

Where

ie, integral Lipschitz filters are stable to relative perturbations

This is the same bound as the one we got for lipschitz graph filters on additive perturbations, just with an additional factor of 2. And just like that bound, we see that

- again, this is not bad for small

, but terrible for large graphs unless for . - this holds for all graphs of size

. ie, this is a property of the graph convolution and will be true regardless of the actual underlying graph - Similar to filters that are stable to dilation and additive perturbation, the Lipschitz constant

can be controlled by design or by penalizing large values.

The difference is that there is no tradeoff between stability and discriminability.

check Gama et al. 2019

see integral Lipschitz filters are stable to relative perturbations

additive perturbations are not very realistic, and so a lipschitz graph filter is enough to get stability.

If we have a perturbation that respects the graph sparsity pattern (models edge weights), we need an integral Lipschitz filter to get stability.

- However, there is a big downside for large graphs, since the bound depends on

.

see stability and size tradeoff for realistic sparsity pattern considerations setting

Generalization to GNNs

Generally, the stability properties of the convolutions will be inherited by their constituent graph filters.

Let

(1) if

(stable to dilation/scaling)

(2) If

(stable to additive perturbations)

(3) If

(stable to relative perturbations)

We begin with some non-restrictive additional assumptions:

- normalized input at all layers (easy to achieve with non-amplifying , ie ) activation function/nonlinearity is normalized Lipschitz, ie has a Lipschitz constant of 1.

Let

with

For each layer

We can apply the same reasoning to get a similar expression for

see GNNs inherit stability from their layers

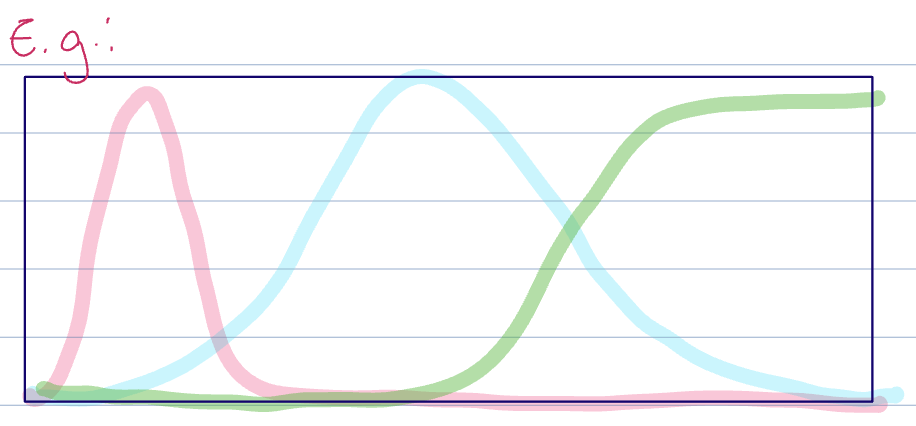

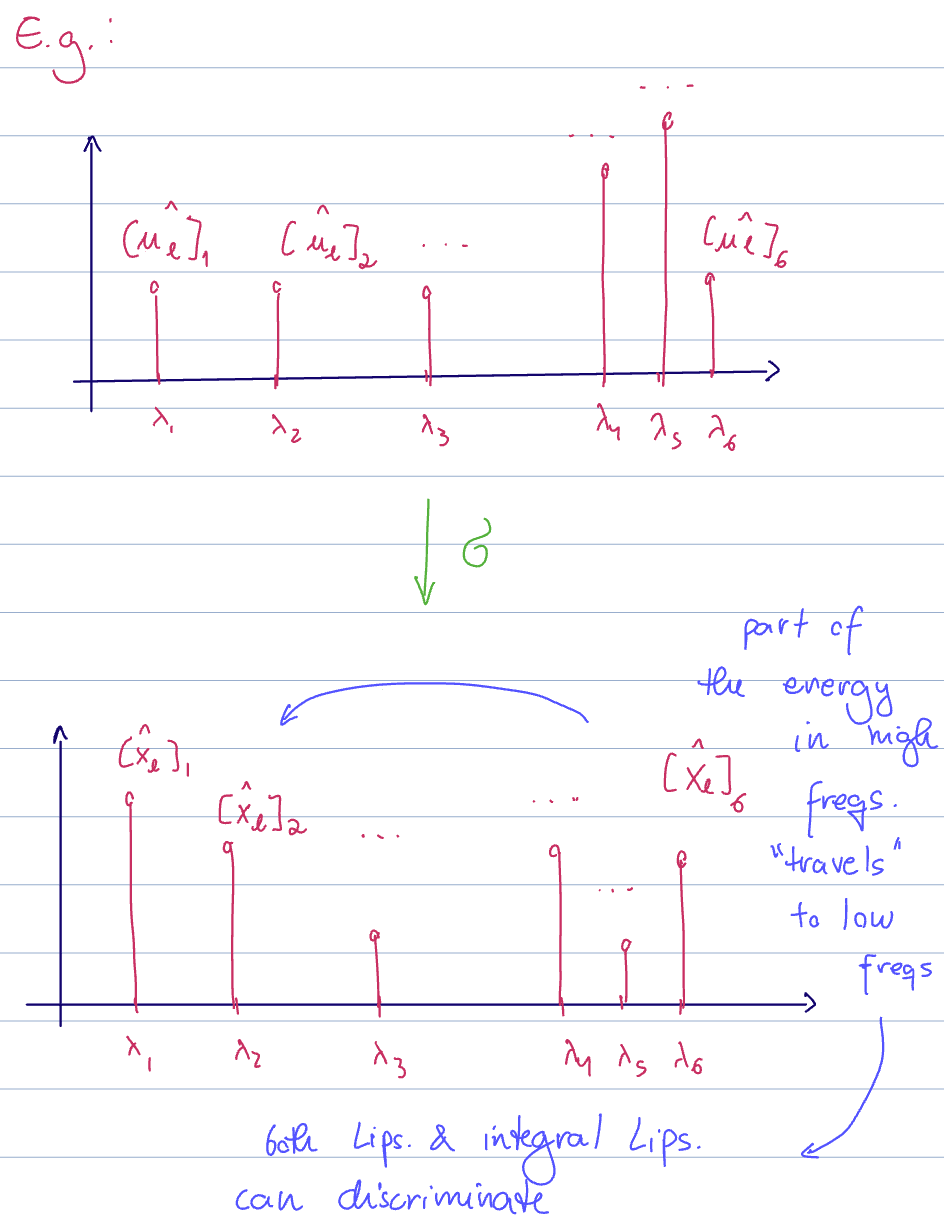

In the node domain, the nonlinearity does not affect the signal much. However, nonlinearities scatter the signal energy across the spectrum.

- we first get a response

from the spectral representation of a convolutional graph filter - after the nonlinearity, some of the energy modes to other areas of the spectrum

Some of the energy in high frequencies "travels" to low frequencies. Both lipschitz graph filters and integral Lipschitz filters can discriminate at these low frequencies.

If any of our task depends on high-frequency data, then this scattering can help us perform well by moving some of the high-frequency information into the lower frequencies, where we have both stability and discriminability

GNNs can perform better than their constituent graph filters. That is, they are more stable for the same level of discriminability, and more discriminative for the same level of stability than a direct composition of the same filters.

This is because the nonlinear activation function "scatters" the signal across the spectrum, moving some high frequency information to lower frequencies and vice versa. Since both lipschitz graph filters and integral Lipschitz filters can discriminate at low frequencies, the mixing from the nonlinearity helps the GNN account for a wider range of the information.