2025-03-05 graphs lecture 12

[[lecture-data]]

- expressivity with respect to the WL test

Today

1. Graph Signals and Graph Signal Processing

Pytorch Things

First: how dataloaders work

Sampling-based GNN training

(1) divide

- number of batches depends on

batch size, a hyperparameter you set

(2) forn batches do

for

for

note

- only embeddings of

are updated (not all of them)

Where

Loss and weights updates are only computed for the nodes in the batch

Last time intro to PyTorch - see Lecture 11 colab notebook

- torch_geometric vs hand-coding: representing graphs as edge lists

- convolutions more like sparse matrix multiplications

- many benchmarks available including Cora, paper citation network dataset

NeighborLoaderis a dataloader for graph nodes- requires training mask, typically included in canonical datasets

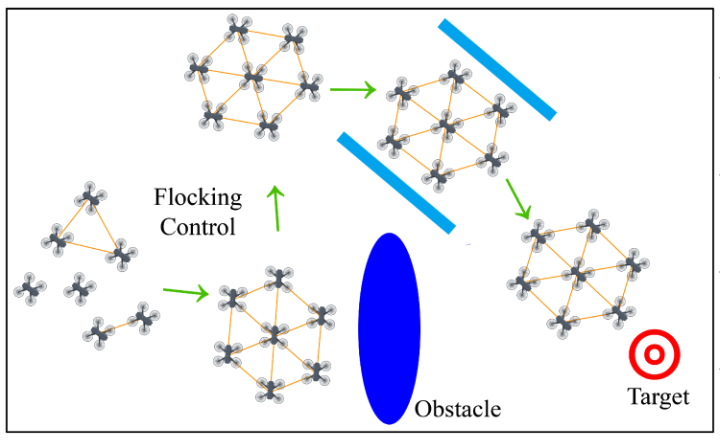

Stability of GNNs to graph perturbations

GNN outputs should be stable. Graphs might have small perturbations, but the outputs should vary as little as possible.

offline controller should still perform well in online trajectories

Recall that permutation equivariance functions as implicit data augmentation, ie, there are symmetries/structures automatically encoded into the data that we can utilize to have better models.

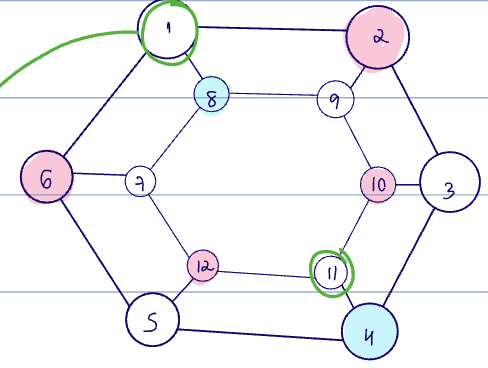

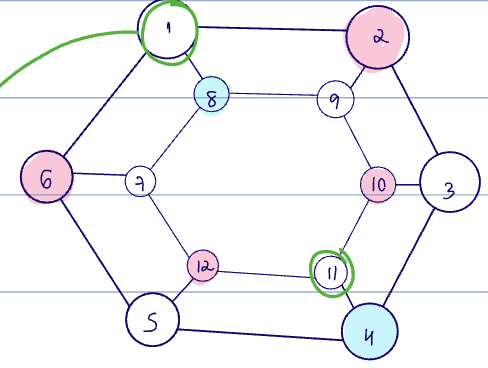

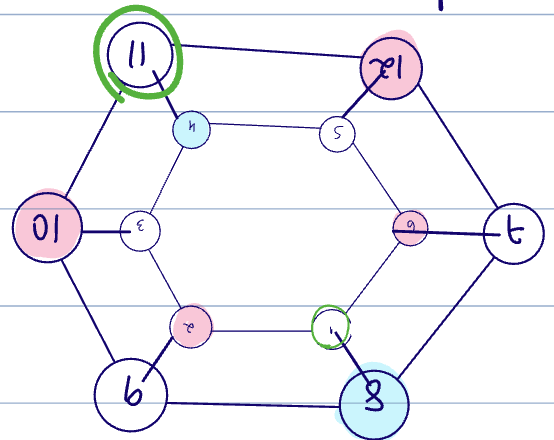

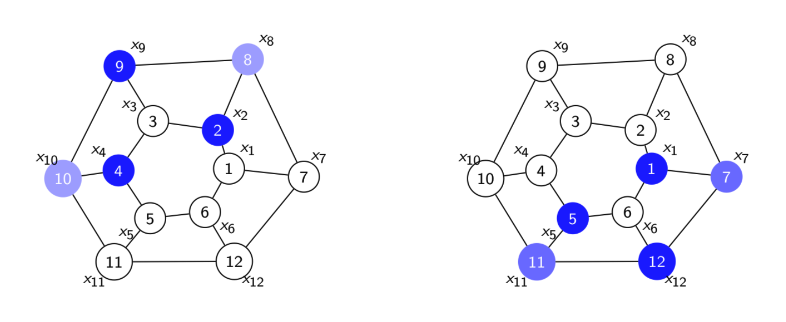

If we observe Node 1's label Node 11's, the GNN still learns to output Node 11 as Node 1. Why? The network learns an automorphism of the graph.

In the example above, the automorphism is

And this means that we don't need to know

Let

If there exists an automorphism for

In a learning task (in a GNN), this means that the shift operator

We typically want our GNNs to be invariant to automorphisms

In practice, most graphs don't have true automorphisms/symmetries, but quasi-symmetries.

Stability to perturbations ensures "data augmentation behavior" or permutation equivariance under not-quite-symmetries.

The operator distance modulo permutations for an operator

This can be defined for any norm on the LHS, but typically is the operator norm induced by the 2 norm.

For graphs, we can consider

see operator distance modulo permutations

When

Let

graph filters are equivariant to permutations

Recall the definition for a convolutional graph filter:

Let

If

For GNNs, we have equivalently

see filter permutation invariance

graph filter stability definitions

A function

or if

(see Lipschitz continuous)

The convolutional graph filter

- (1) if

, then - (2) if

, then

Because continuous linear functions are lipschitz continuous, graph convolutions are naturally lipschitz and stable to perturbations on both

graph convolutions are Lipschitz stable to perturbations in

This is because the convolutional graph filter

(see graph convolutions are stable to perturbations in the data and coefficients)

However, graph convolution is nonlinear in

Suppose we have a graph shift operator

Recall the spectral representation of a convolutional graph filter:

Let

This uses the idea that once the filter is fixed, we can think of the representation as sampling along a "spectrum polynomial". Typically,

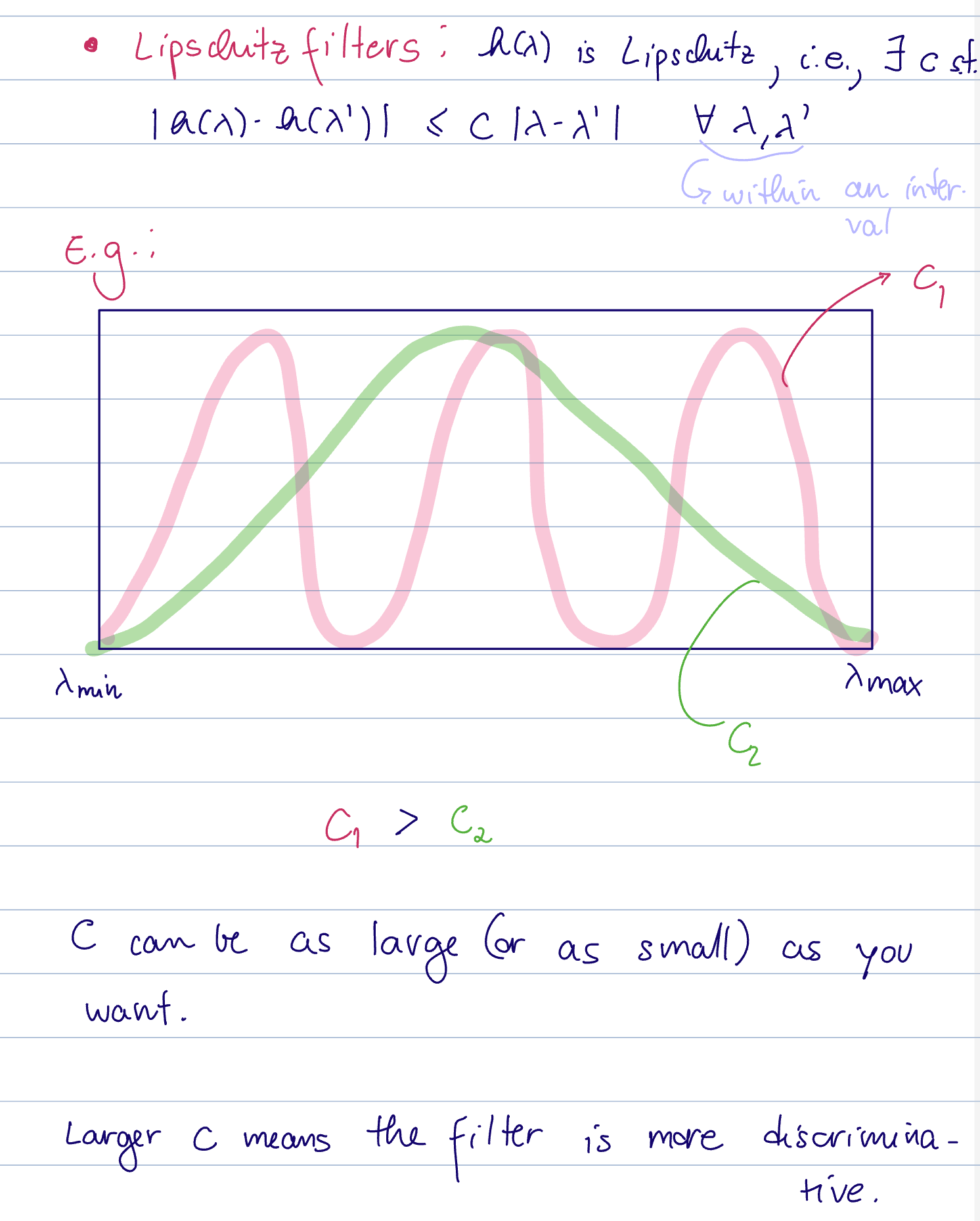

The discriminability of a graph filter describes the ability of the filter to tell the difference between different eigenvalues of the shift operator spectrum.

Recall that we can think of the spectrum as a polynomial in the eigenvalues of the shift operator.

The discriminability thus corresponds with

see discriminability of a graph filter

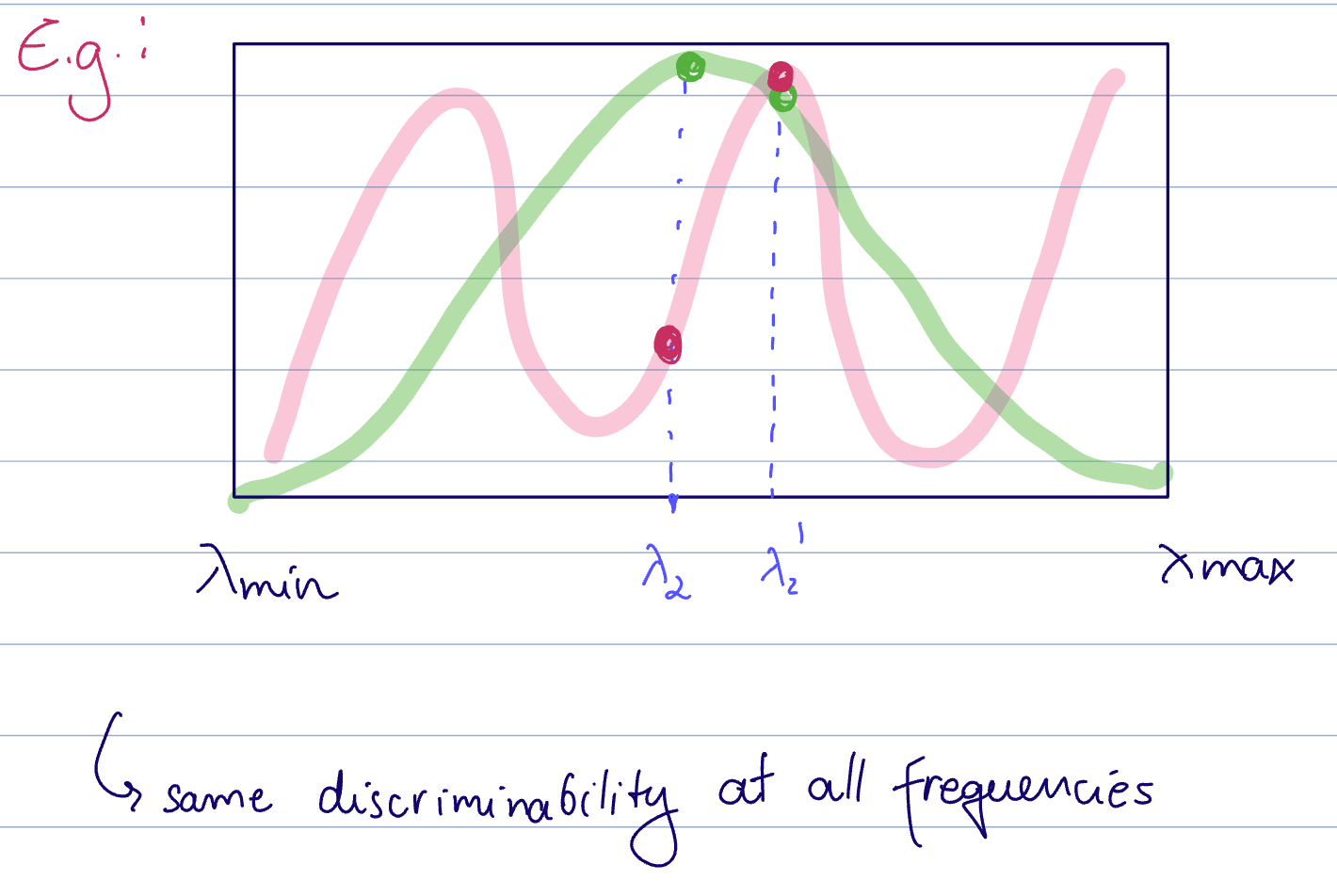

Note that the discriminability is the same at all frequencies. This means there is a tradeoff on benefits between having a large vs a small Lipschitz constant.

- with a small

, there is better stability for small perturbations on the eigenvalues - However, if there are large perturbations on the graph, it is good to be more discriminating to notice these changes.

Here, green has a smaller

- The green filter is stable and gives a response that is very close to at both

and - whereas the pink filter has a larger difference at the two sampled locations, but has better discriminability

Having a larger Lipschitz constant results in better discrimination between the responses, but less stability.

see stability-discriminability tradeoff for Lipschitz filters

A relaxed version of a lipschitz graph filter is an integral Lipschitz filter.

Let

ie,

Letting

We consider 3 perturbation types (first for graph convolutions, then for GNNs)

We can interpret this as the edges being scaled by

- [p] This is a reasonable perturbation model because the edges change in proportion to their values.

- [c] Cannot model edge additions or deletions

Let

That is, integral Lipschitz filters are stable to dilations.