2025-02-05 graphs lecture 5



Scribing

- graph convolutions and design

- Learning weights of graph convolution

- Empirical risk minimization problem, statistical risk minimization problem

- 3 problems

Today

- Graph Neural Networks

- graph perceptron

- multi-layer graph perceptron

- PyTorch (HW): fully connected neural networks

1. Graph Signals and Graph Signal Processing

Graph filters work well for many tasks, but they are linear in the input signal. Therefore, they lack expressivity when the task is complicated: since these operations are only linear, we are not able to represent more complex (nonlinear) relationships between inputs and outputs.
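To make the linearity concrete, here is a minimal plain-Python sketch of a convolutional graph filter $y = \sum_{k=0}^{K-1} h_k S^k x$ that checks $H(a x_1 + b x_2) = a H(x_1) + b H(x_2)$. The helper names, the 4-node path graph used as $S$, and the filter taps are assumptions chosen only for illustration.

```python
def matvec(S, x):
    """Multiply an n x n matrix (list of rows) by a length-n vector."""
    return [sum(s * v for s, v in zip(row, x)) for row in S]

def graph_filter(S, h, x):
    """Graph convolution y = sum_k h[k] * S^k x, computed by repeated shifts."""
    y = [0.0] * len(x)
    z = list(x)  # z holds S^k x, starting with S^0 x = x
    for h_k in h:
        y = [y_i + h_k * z_i for y_i, z_i in zip(y, z)]
        z = matvec(S, z)  # diffuse the signal one more hop
    return y

# Adjacency matrix of the 4-node path graph 0 - 1 - 2 - 3 (assumed example)
S = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]
h = [1.0, 0.5, 0.25]  # K = 3 filter taps (arbitrary values)
x1 = [1.0, 0.0, 0.0, 0.0]
x2 = [0.0, 2.0, -1.0, 3.0]
a, b = 2.0, -3.0

lhs = graph_filter(S, h, [a * u + b * v for u, v in zip(x1, x2)])
rhs = [a * u + b * v for u, v in zip(graph_filter(S, h, x1), graph_filter(S, h, x2))]
print(all(abs(l - r) < 1e-12 for l, r in zip(lhs, rhs)))  # True: the filter is linear
```

No matter which taps $h_k$ are learned, the filter stays a linear map, which is exactly the expressivity limit described above.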

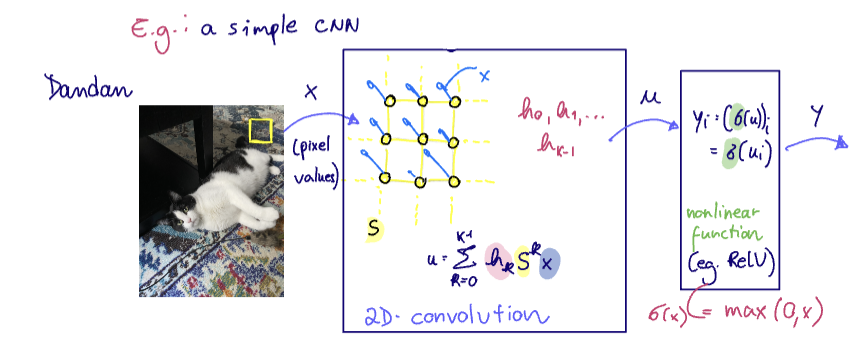

Thus, we need to add nonlinearities (e.g., ReLU) to increase the expressivity of our model. Such nonlinearities are already standard in CNNs for image processing and in other domains. GNNs extend the ideas of CNNs to the graph domain by replacing the convolution with a graph convolution.

We can also think of any image as a graph, where the graph signals are the RGB values at each pixel, and the graph structure is determined by the pixel layout/grid of the image.
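A minimal sketch of this view, assuming 4-neighbor connectivity between adjacent pixels (8-neighbor grids are another common choice): pixel $(r, c)$ becomes node $r \cdot W + c$, and the graph signal is an $n \times 3$ matrix of RGB values. The function name `grid_graph` and the pixel values are hypothetical.

```python
def grid_graph(height, width):
    """Adjacency matrix of a height x width pixel grid with 4-neighbor connectivity.
    Pixel (r, c) is node r * width + c."""
    n = height * width
    A = [[0] * n for _ in range(n)]
    for r in range(height):
        for c in range(width):
            i = r * width + c
            if c + 1 < width:   # edge to the right neighbor
                j = i + 1
                A[i][j] = A[j][i] = 1
            if r + 1 < height:  # edge to the neighbor below
                j = i + width
                A[i][j] = A[j][i] = 1
    return A

# A tiny 2 x 3 "image": one (R, G, B) triple per pixel, row by row (made-up values)
image = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)],
         [(10, 20, 30), (40, 50, 60), (70, 80, 90)]]
height, width = len(image), len(image[0])
A = grid_graph(height, width)
X = [list(pixel) for row in image for pixel in row]  # graph signal: n x 3 matrix

degrees = [sum(row) for row in A]
print(degrees)           # [2, 3, 2, 2, 3, 2]: corner pixels have 2 neighbors
print(len(X), len(X[0]))  # 6 3
```

Note that the signal here already has 3 features per node, a first example of the multi-dimensional graph signals discussed later.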

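Let a graph perceptron map an input signal $x$ to

$$\sigma\left( \sum_{k=0}^{K-1} h_k S^k x \right),$$

i.e., a graph convolution followed by a pointwise nonlinear function $\sigma$. Common choices of $\sigma$:

- ReLU: $\sigma(u) = \max(0, u)$
- sigmoid: $\sigma(u) = 1 / (1 + e^{-u})$
- hyperbolic tangent: $\sigma(u) = \tanh(u)$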

(see graph perceptron)
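A plain-Python sketch of a graph perceptron, assuming the same kind of toy setup as before (4-node path graph, made-up taps; all helper names are hypothetical). It shows that the pointwise nonlinearity breaks linearity: a linear map would satisfy $f(-x) = -f(x)$.

```python
import math

def matvec(S, x):
    """Multiply an n x n matrix (list of rows) by a length-n vector."""
    return [sum(s * v for s, v in zip(row, x)) for row in S]

def graph_filter(S, h, x):
    """Graph convolution sum_k h[k] * S^k x, computed by repeated shifts."""
    y, z = [0.0] * len(x), list(x)  # z holds S^k x
    for h_k in h:
        y = [y_i + h_k * z_i for y_i, z_i in zip(y, z)]
        z = matvec(S, z)
    return y

def relu(u):
    return max(0.0, u)

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

def graph_perceptron(S, h, x, sigma=relu):
    """Graph convolution followed by the pointwise nonlinearity sigma
    (e.g. relu, sigmoid, or math.tanh)."""
    return [sigma(u) for u in graph_filter(S, h, x)]

S = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]  # 4-node path graph (assumed example)
h = [1.0, 0.5, 0.25]
x = [1.0, 0.0, 0.0, 0.0]

y_pos = graph_perceptron(S, h, x)
y_neg = graph_perceptron(S, h, [-v for v in x])
print(y_pos)  # [1.25, 0.5, 0.25, 0.0]
print(y_neg)  # [0.0, 0.0, 0.0, 0.0]: ReLU zeroes the negative filter outputs
```

Since `y_neg` is not `-y_pos`, the perceptron is not a linear map of $x$.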

Is a graph perceptron local?

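Yes. Convolutional graph filters are local: for a shift operator $S$ that respects the sparsity of the graph (e.g., the adjacency or Laplacian matrix), $[S^k x]_i$ depends only on nodes within $k$ hops of node $i$, so the filter output at node $i$ involves only nodes within $K-1$ hops. The nonlinearity is applied pointwise (node by node), so the perceptron can still be computed from local neighborhoods.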
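A quick locality check, as a sketch under assumed toy choices (6-node path graph, positive taps, ReLU; names are hypothetical): perturbing the signal at one node only changes the perceptron output at nodes within $K-1$ hops of it.

```python
def matvec(S, x):
    return [sum(s * v for s, v in zip(row, x)) for row in S]

def graph_perceptron(S, h, x):
    """ReLU applied to the graph convolution sum_k h[k] * S^k x."""
    y, z = [0.0] * len(x), list(x)  # z holds S^k x
    for h_k in h:
        y = [y_i + h_k * z_i for y_i, z_i in zip(y, z)]
        z = matvec(S, z)  # each shift reaches one hop further
    return [max(0.0, u) for u in y]

def path_graph(n):
    """Adjacency matrix of the path graph 0 - 1 - ... - (n-1)."""
    return [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]

S = path_graph(6)
h = [1.0, 0.5, 0.25]  # K = 3 taps, so each output sees at most K - 1 = 2 hops
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x_perturbed = list(x)
x_perturbed[5] = 100.0  # change the signal at node 5 only

y, y_p = graph_perceptron(S, h, x), graph_perceptron(S, h, x_perturbed)
changed = [i for i in range(6) if y[i] != y_p[i]]
print(changed)  # [3, 4, 5]: only nodes within 2 hops of node 5
```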

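An $L$-layer graph perceptron (multi-layer graph perceptron) stacks $L$ graph perceptrons, feeding the output of each layer into the next.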

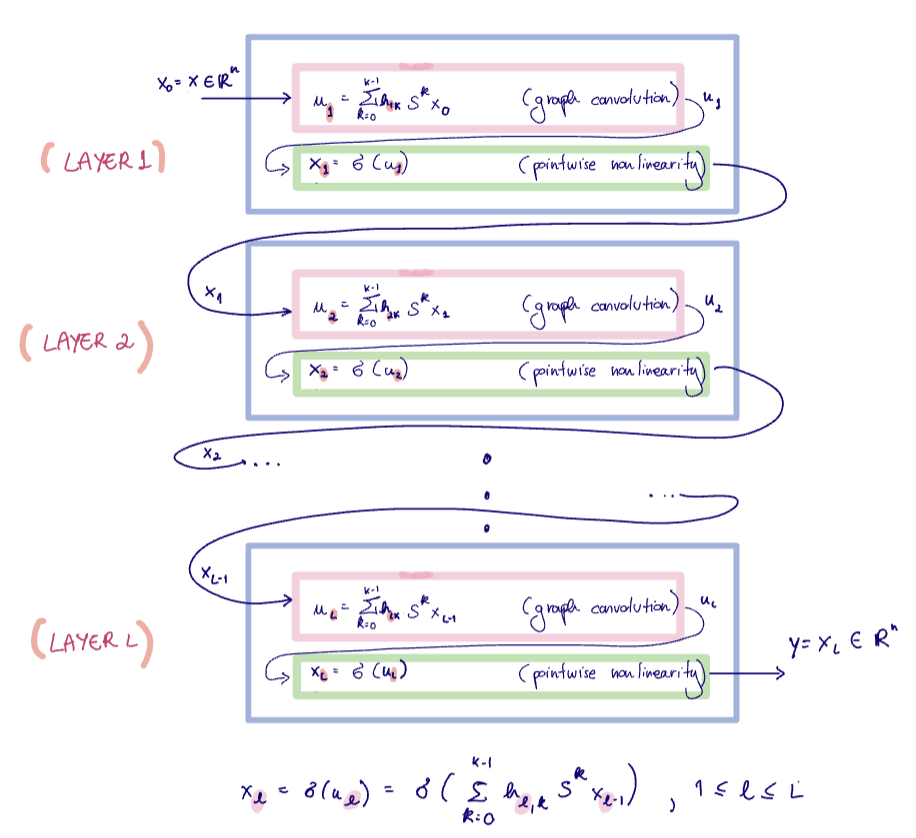

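At each layer,

$$x_{\ell} = \sigma(u_{\ell}) = \sigma\left( \sum_{k=0}^{K-1} h_{\ell,k} S^k x_{\ell-1} \right), \quad 1 \leq \ell \leq L,$$

where $x_0$ is the input signal and $u_\ell$ is the output of the graph convolution at layer $\ell$.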
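The layer recursion above can be sketched in plain Python, assuming ReLU and a toy 6-node path graph (the function names and weights are hypothetical). Because each layer is local, stacking $L$ layers with $K$ taps each lets node $i$ see at most $L(K-1)$ hops; with $L = 2$ and $K = 2$ below, that is 2 hops.

```python
def matvec(S, x):
    return [sum(s * v for s, v in zip(row, x)) for row in S]

def graph_filter(S, h, x):
    """u = sum_k h[k] * S^k x."""
    u, z = [0.0] * len(x), list(x)  # z holds S^k x
    for h_k in h:
        u = [u_i + h_k * z_i for u_i, z_i in zip(u, z)]
        z = matvec(S, z)
    return u

def relu(u):
    return max(0.0, u)

def multilayer_graph_perceptron(S, H, x, sigma=relu):
    """H[l - 1] holds the taps h_{l,0}, ..., h_{l,K-1} of layer l; x is x_0."""
    x_l = list(x)
    for h_l in H:
        x_l = [sigma(u) for u in graph_filter(S, h_l, x_l)]  # x_l = sigma(u_l)
    return x_l  # x_L

S = [[1 if abs(i - j) == 1 else 0 for j in range(6)] for i in range(6)]  # path graph
H = [[1.0, 0.5],   # layer 1: K = 2 taps
     [0.5, 0.25]]  # layer 2: K = 2 taps
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x_perturbed = x[:5] + [100.0]  # change node 5 only

out = multilayer_graph_perceptron(S, H, x)
out_p = multilayer_graph_perceptron(S, H, x_perturbed)
print([i for i in range(6) if out[i] != out_p[i]])  # [3, 4, 5]
```

Here the weights of the whole network are just the $L \times K$ numbers in `H`.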

We call

For conciseness, we define our weights $\mathcal{H} = \{h_{\ell,k}\}$, with $1 \leq \ell \leq L$ and $0 \leq k \leq K-1$.

Full-Fledged GNNs

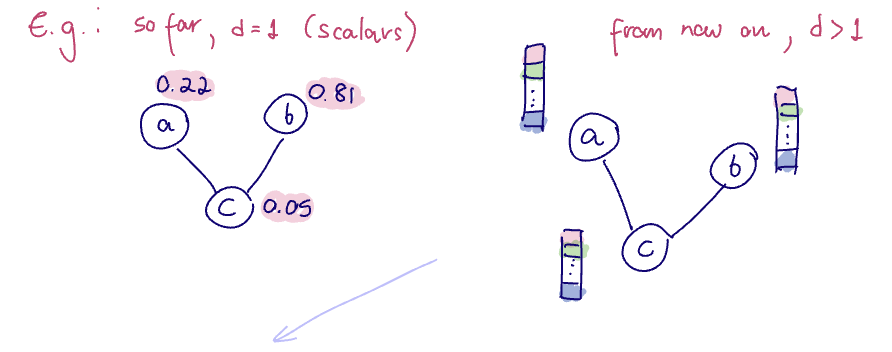

Real-world graph data is often high dimensional.

- In addition to having signal values at the nodes, we often have many features per node. That is, our signal is a matrix $X \in \mathbb{R}^{n \times d}$, where $n$ is the number of nodes and $d$ is the number of features (previously we have been looking at the case where $d = 1$).

- Thus it will be necessary for the embeddings $X_\ell$ to be multi-dimensional. Note that we may also want embeddings to be multi-dimensional for higher expressivity, flexibility, and better representations, not solely because it is necessary.

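Suppose the input to layer $\ell$ is $X_{\ell-1} \in \mathbb{R}^{n \times d_{\ell-1}}$ and we want an output $X_\ell \in \mathbb{R}^{n \times d_\ell}$. In order to do this, we need graph convolutions that take in multi-dimensional data.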

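Let

$$U_\ell = \sum_{k=0}^{K-1} S^k X_{\ell-1} H_{\ell,k},$$

where $S$ is still a graph diffusion/shift, acting on the nodes, and each $H_{\ell,k} \in \mathbb{R}^{d_{\ell-1} \times d_\ell}$ is a linear transformation mapping the $d_{\ell-1}$ input features to $d_\ell$ output features.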

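A "full-fledged" GNN layer is given by

$$X_\ell = \sigma\left( \sum_{k=0}^{K-1} S^k X_{\ell-1} H_{\ell,k} \right), \quad 1 \leq \ell \leq L.$$

Here, $\sigma$ is applied entrywise; when $d_{\ell-1} = d_\ell = 1$, each $H_{\ell,k}$ reduces to a scalar $h_{\ell,k}$ and we recover the multi-layer graph perceptron.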

(see Graph Neural Networks)
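A plain-Python sketch of one full-fledged layer, assuming it takes the form $X_\ell = \sigma\left(\sum_{k=0}^{K-1} S^k X_{\ell-1} H_{\ell,k}\right)$ with ReLU. $S$ multiplies from the left (mixing across nodes) and $H_{\ell,k}$ from the right (mixing features). The names `matmul` and `gnn_layer`, the path graph, and all weights are made up for illustration.

```python
def matmul(A, B):
    """(m x p) times (p x q); matrices are lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def relu(u):
    return max(0.0, u)

def gnn_layer(S, H_l, X_prev, sigma=relu):
    """X_l = sigma(sum_k S^k X_prev H_l[k]); each H_l[k] is d_in x d_out."""
    n, d_out = len(X_prev), len(H_l[0][0])
    U = [[0.0] * d_out for _ in range(n)]
    Z = X_prev  # Z holds S^k X_prev
    for k, H_k in enumerate(H_l):
        if k > 0:
            Z = matmul(S, Z)  # graph shift: mixes signals across neighboring nodes
        ZH = matmul(Z, H_k)   # feature map: d_in features -> d_out features
        U = [[u + v for u, v in zip(u_row, zh_row)] for u_row, zh_row in zip(U, ZH)]
    return [[sigma(u) for u in row] for row in U]

S = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]  # 4-node path graph (assumed example)
X0 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0],
      [2.0, -1.0]]  # n = 4 nodes, d_0 = 2 features
H1 = [[[1.0, 0.0, -1.0], [0.5, 1.0, 0.0]],  # H_{1,0}: 2 x 3
      [[0.2, 0.1, 0.0], [0.0, 0.3, 0.4]]]   # H_{1,1}: 2 x 3 (K = 2 taps)
X1 = gnn_layer(S, H1, X0)
print(len(X1), len(X1[0]))  # 4 3
```

By associativity, $(S^k X_{\ell-1}) H_{\ell,k} = S^k (X_{\ell-1} H_{\ell,k})$, so one could also apply the feature map first; that order is cheaper when $d_\ell < d_{\ell-1}$.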